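The speech synthesis and recognition service (hereafter "the service") provides high-quality text-to-speech (TTS) and automatic speech recognition (ASR, speech-to-text). To make it quick to deploy and use, we provide a Docker-based deployment. This document describes how to deploy the service with Docker.

The service is built on PaddleSpeech and runs TTS and ASR on CPU only. It can be deployed and used quickly with Docker.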

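Before you start, make sure your environment meets the following requirements:

1. Docker and Docker Compose are installed.

2. You are comfortable with basic Linux command-line operations.

3. The server has enough compute resources (CPU, memory, and so on; as noted above, the service itself runs on CPU only).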
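The first requirement can be checked from a script before running the installer. The following is a minimal sketch, assuming the tools are expected on PATH under the names docker and docker-compose; the function name find_tools is our own and not part of the installer.

```python
import shutil


def find_tools(names=("docker", "docker-compose")):
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


def missing_tools(names=("docker", "docker-compose")):
    """Return the tool names that could not be found on PATH."""
    return [name for name, path in find_tools(names).items() if path is None]
```

Calling missing_tools() returns an empty list when both tools are installed. On hosts that only ship the Compose v2 plugin, docker-compose may be absent even though docker compose works, so treat a missing docker-compose as a hint rather than a hard failure.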

We provide a prebuilt Docker image, hosted on Qiniu Cloud. Download it from:

https://datacdn.data-it.tech/HomeAssistant/dokerimages/paddlespeech1.1/paddlespeech.tar

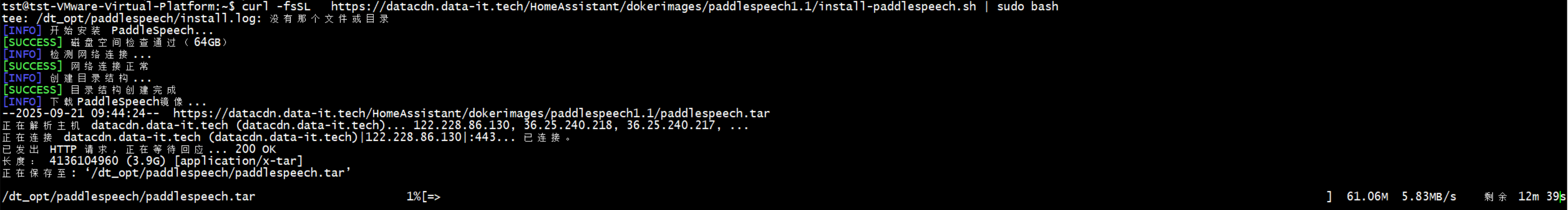

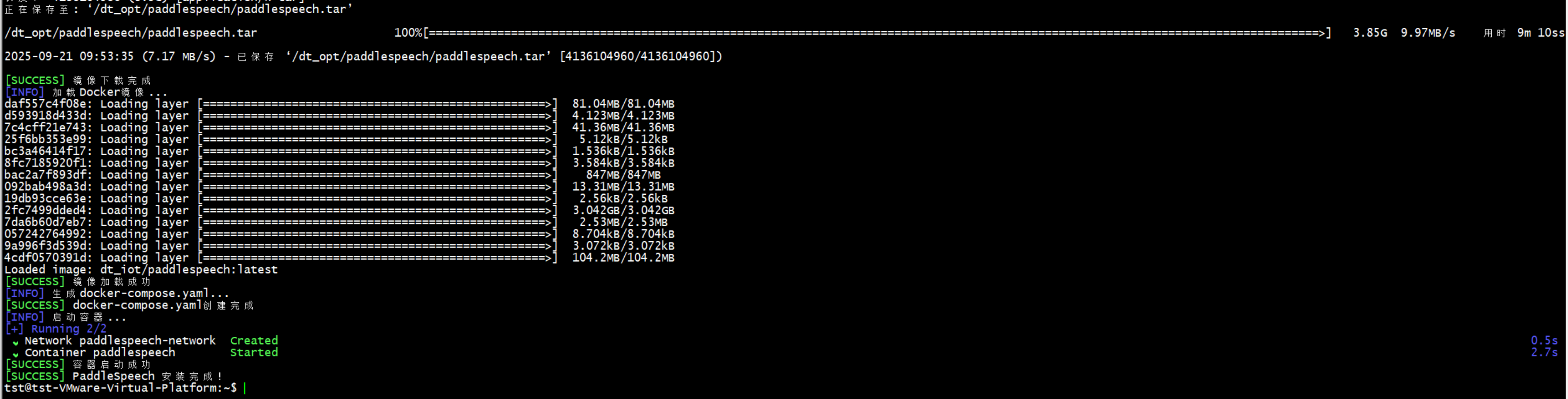

curl -fsSL https://datacdn.data-it.tech/HomeAssistant/dokerimages/paddlespeech1.1/install-paddlespeech.sh | sudo bash

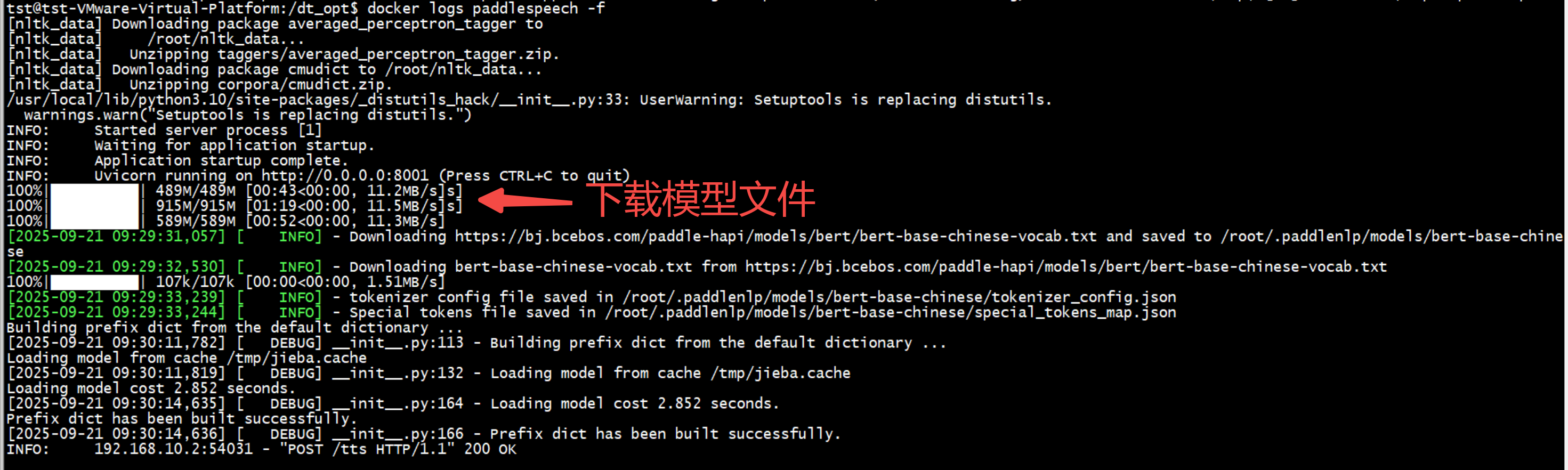

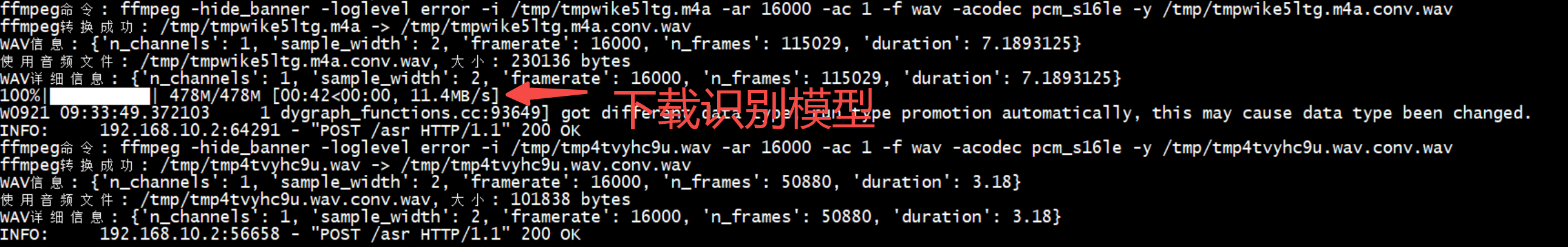

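The first time you use the TTS or ASR service, the model files are downloaded automatically. They are large, and ***the download takes about 10 minutes***, so please be patient.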

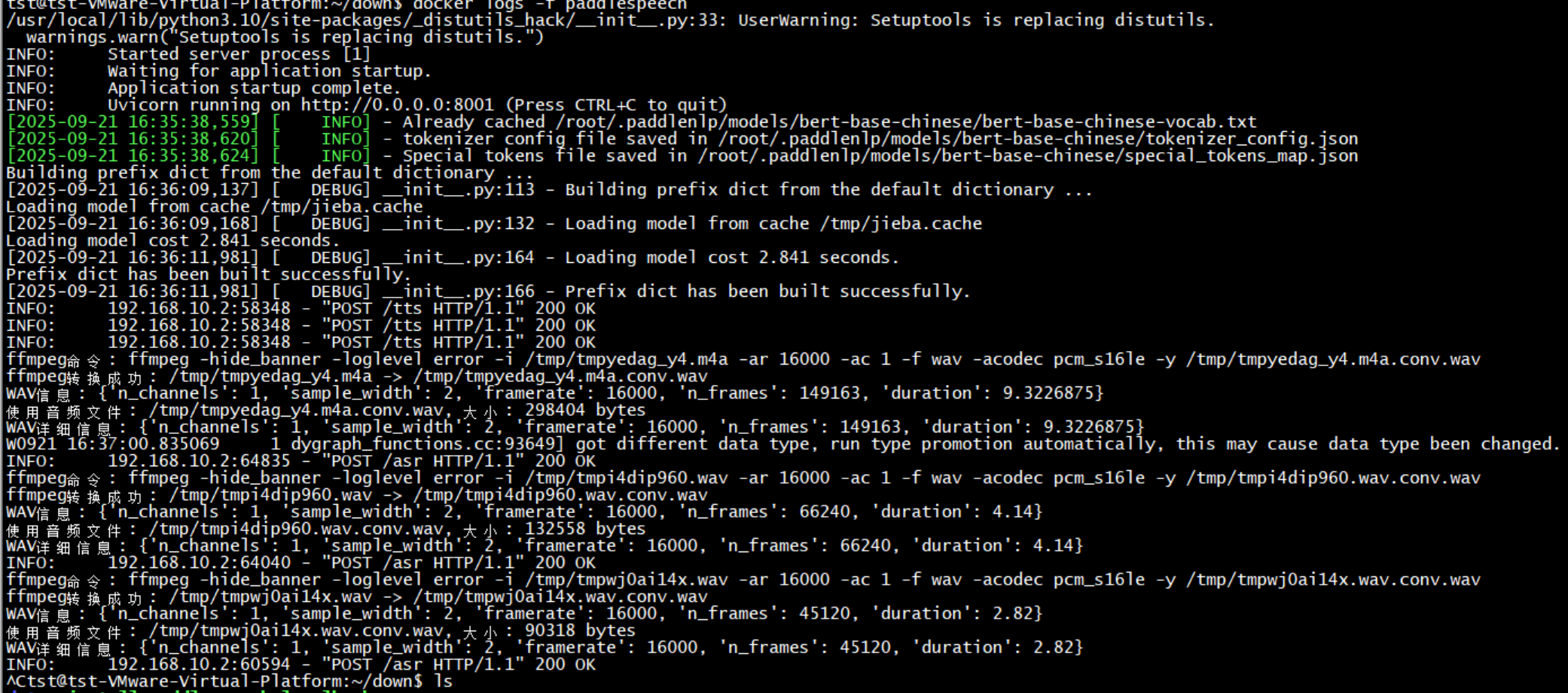

You can follow the logs in real time with the following command:

docker logs -f paddlespeech

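On first use, the TTS model automatically downloads about 2 GB of model files from the network, and the service starts once the download completes. Later runs do not download the model files again.

On first use, the ASR model automatically downloads about 1 GB of model files from the network, and the service starts once the download completes. Later runs do not download the model files again.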
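Because the first start can take several minutes, a client may want to wait before sending requests. The sketch below polls a probe until it succeeds. The port 8001 in the usage note is taken from the default mapping in docker-compose.yaml; the helper names are our own, and an open port only shows that the container is listening, not that every model has finished downloading.

```python
import socket
import time


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_until_ready(probe, attempts=60, interval=10.0, sleep=time.sleep):
    """Call probe() up to `attempts` times, sleeping `interval` seconds between tries.

    Returns the 1-based attempt number that succeeded, or None if all failed.
    """
    for attempt in range(1, attempts + 1):
        if probe():
            return attempt
        if attempt < attempts:
            sleep(interval)
    return None
```

For example, wait_until_ready(lambda: port_is_open("localhost", 8001)) waits up to roughly ten minutes with the default settings.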

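The offline installation package has two parts: the container image and the data volume files. Before the container is installed and started, the data volume files are put in place of the directories bound as data volumes. The container then does not need to download any models after it starts and can be used without Internet access.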

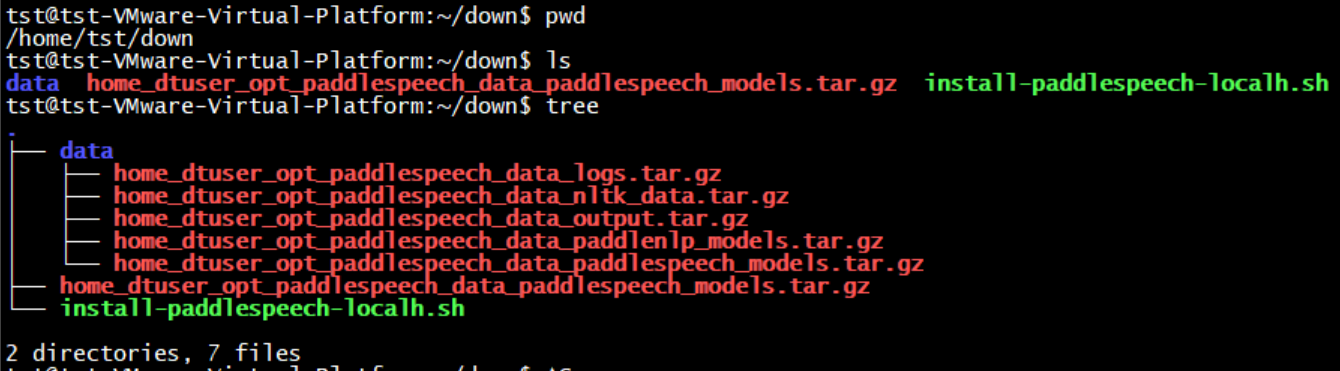

In the example below, the files are placed in the down directory under the user's home directory. Keep the directory structure as shown:

tst@tst-VMware-Virtual-Platform:~/down$ pwd

/home/tst/down

tst@tst-VMware-Virtual-Platform:~/down$ ls

data install-paddlespeech-localh.sh

tst@tst-VMware-Virtual-Platform:~/down$ tree

.

├── data

│ ├── home_dtuser_opt_paddlespeech_data_logs.tar.gz

│ ├── home_dtuser_opt_paddlespeech_data_nltk_data.tar.gz

│ ├── home_dtuser_opt_paddlespeech_data_output.tar.gz

│ ├── home_dtuser_opt_paddlespeech_data_paddlenlp_models.tar.gz

│ └── home_dtuser_opt_paddlespeech_data_paddlespeech_models.tar.gz

├── home_dtuser_opt_paddlespeech_data_paddlespeech_models.tar.gz

└── install-paddlespeech-localh.sh

2 directories, 7 files
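Before running the installer, it can help to confirm that the package was copied completely. The following sketch checks only the five archives under data and the install script that appear in the listing above; the function name is hypothetical, and the installer itself may perform additional checks.

```python
from pathlib import Path

# Archive names as they appear under data/ in the listing above.
EXPECTED_ARCHIVES = (
    "home_dtuser_opt_paddlespeech_data_logs.tar.gz",
    "home_dtuser_opt_paddlespeech_data_nltk_data.tar.gz",
    "home_dtuser_opt_paddlespeech_data_output.tar.gz",
    "home_dtuser_opt_paddlespeech_data_paddlenlp_models.tar.gz",
    "home_dtuser_opt_paddlespeech_data_paddlespeech_models.tar.gz",
)
INSTALL_SCRIPT = "install-paddlespeech-localh.sh"


def missing_offline_files(root):
    """Return the expected files (relative, POSIX-style) that are absent under root."""
    root = Path(root)
    expected = [Path("data") / name for name in EXPECTED_ARCHIVES]
    expected.append(Path(INSTALL_SCRIPT))
    return [path.as_posix() for path in expected if not (root / path).is_file()]
```

An empty list from missing_offline_files("/home/tst/down") means the layout matches the listing.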

chmod +x install-paddlespeech-localh.sh

sudo ./install-paddlespeech-localh.sh

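The installation script first extracts the files, loads the Docker image, and then starts the container. Once the container has started, you can check its status with docker ps, as shown below: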

tst@tst-VMware-Virtual-Platform:~/down$ sudo ./install-paddlespeech-localh.sh

[INFO] 开始 PaddleSpeech 离线安装...

[SUCCESS] 磁盘空间检查通过(55GB)

[INFO] 检测网络连接...

[SUCCESS] 网络连接正常

[INFO] 创建目录结构...

[SUCCESS] 目录结构创建完成

[INFO] 解压数据卷...

[INFO] 正在解压: /home/tst/down/data/home_dtuser_opt_paddlespeech_data_logs.tar.gz 到 /dt_opt/paddlespeech/data/logs

./

[INFO] 正在解压: /home/tst/down/data/home_dtuser_opt_paddlespeech_data_nltk_data.tar.gz 到 /dt_opt/paddlespeech/data/nltk_data

./

./taggers/

./taggers/averaged_perceptron_tagger/

./taggers/averaged_perceptron_tagger/averaged_perceptron_tagger.pickle

./taggers/averaged_perceptron_tagger.zip

./corpora/

./corpora/cmudict/

./corpora/cmudict/cmudict

./corpora/cmudict/README

./corpora/cmudict.zip

[INFO] 正在解压: /home/tst/down/data/home_dtuser_opt_paddlespeech_data_output.tar.gz 到 /dt_opt/paddlespeech/data/output

./

./2753d658-b228-4ae6-94fc-3404a41f4526.wav

./9f24d71e-9f29-4d60-aa13-677fd19bba46.wav

./38ea94c6-e19b-4e41-8cca-454d8e374a15.wav

./d44aa891-c36b-473f-aef3-44484f0cd74b.wav

./c5ef53d3-502b-4f81-9fdd-583836ba7096.wav

./3931cd47-e022-4385-b588-4a61e64e5eed.wav

./1d213380-eee6-43ba-88db-99ba9b5609df.wav

./c85f65de-a4c1-43aa-a251-52ce4e9df271.wav

./7cd5fc65-665b-444c-9c9f-41f1ce8036f8.wav

./f74dbda7-1cf8-4056-bd30-523b74af8b0e.wav

[INFO] 正在解压: /home/tst/down/data/home_dtuser_opt_paddlespeech_data_paddlenlp_models.tar.gz 到 /dt_opt/paddlespeech/data/paddlenlp_models

./

./packages/

./models/

./models/bert-base-chinese/

./models/bert-base-chinese/bert-base-chinese-vocab.txt

./models/bert-base-chinese/tokenizer_config.json

./models/bert-base-chinese/vocab.txt

./models/bert-base-chinese/special_tokens_map.json

./models/embeddings/

./models/.locks/

./models/.locks/bert-base-chinese/

./datasets/

[INFO] 正在解压: /home/tst/down/data/home_dtuser_opt_paddlespeech_data_paddlespeech_models.tar.gz 到 /dt_opt/paddlespeech/data/paddlespeech_models

./

./conf/

./conf/cache.yaml

./models/

./models/G2PWModel_1.1/

./models/G2PWModel_1.1/config.py

./models/G2PWModel_1.1/MONOPHONIC_CHARS.txt

./models/G2PWModel_1.1/__pycache__/

./models/G2PWModel_1.1/__pycache__/config.cpython-39.pyc

./models/G2PWModel_1.1/__pycache__/config.cpython-310.pyc

./models/G2PWModel_1.1/record.log

./models/G2PWModel_1.1/bopomofo_to_pinyin_wo_tune_dict.json

./models/G2PWModel_1.1/POLYPHONIC_CHARS.txt

./models/G2PWModel_1.1/char_bopomofo_dict.json

./models/G2PWModel_1.1/g2pW.onnx

./models/G2PWModel_1.1/version

./models/G2PWModel_1.1.zip

./models/conformer_wenetspeech-zh-16k/

./models/conformer_wenetspeech-zh-16k/1.0/

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/conf/

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/conf/preprocess.yaml

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/conf/tuning/

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/conf/tuning/decode.yaml

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/conf/conformer.yaml

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/conf/conformer_infer.yaml

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/data/

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/data/lang_char/

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/data/lang_char/vocab.txt

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/data/mean_std.json

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/model.yaml

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/exp/

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/exp/conformer/

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/exp/conformer/checkpoints/

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar/exp/conformer/checkpoints/wenetspeech.pdparams

./models/conformer_wenetspeech-zh-16k/1.0/asr1_conformer_wenetspeech_ckpt_0.1.1.model.tar.gz

./models/fastspeech2_csmsc-zh/

./models/fastspeech2_csmsc-zh/1.0/

./models/fastspeech2_csmsc-zh/1.0/fastspeech2_nosil_baker_ckpt_0.4.zip

./models/fastspeech2_csmsc-zh/1.0/fastspeech2_nosil_baker_ckpt_0.4/

./models/fastspeech2_csmsc-zh/1.0/fastspeech2_nosil_baker_ckpt_0.4/snapshot_iter_76000.pdz

./models/fastspeech2_csmsc-zh/1.0/fastspeech2_nosil_baker_ckpt_0.4/phone_id_map.txt

./models/fastspeech2_csmsc-zh/1.0/fastspeech2_nosil_baker_ckpt_0.4/default.yaml

./models/fastspeech2_csmsc-zh/1.0/fastspeech2_nosil_baker_ckpt_0.4/speech_stats.npy

./models/fastspeech2_csmsc-zh/1.0/fastspeech2_nosil_baker_ckpt_0.4/pitch_stats.npy

./models/fastspeech2_csmsc-zh/1.0/fastspeech2_nosil_baker_ckpt_0.4/energy_stats.npy

./models/hifigan_csmsc-zh/

./models/hifigan_csmsc-zh/1.0/

./models/hifigan_csmsc-zh/1.0/hifigan_csmsc_ckpt_0.1.1.zip

./models/hifigan_csmsc-zh/1.0/hifigan_csmsc_ckpt_0.1.1/

./models/hifigan_csmsc-zh/1.0/hifigan_csmsc_ckpt_0.1.1/default.yaml

./models/hifigan_csmsc-zh/1.0/hifigan_csmsc_ckpt_0.1.1/snapshot_iter_2500000.pdz

./models/hifigan_csmsc-zh/1.0/hifigan_csmsc_ckpt_0.1.1/feats_stats.npy

./datasets/

[SUCCESS] 数据卷解压完成

[INFO] 解压镜像包...

[SUCCESS] 镜像包已移动到 /dt_opt/paddlespeech/images

[INFO] 加载Docker镜像...

daf557c4f08e: Loading layer [==================================================>] 81.04MB/81.04MB

d593918d433d: Loading layer [==================================================>] 4.123MB/4.123MB

7c4cff21e743: Loading layer [==================================================>] 41.36MB/41.36MB

25f6bb353e99: Loading layer [==================================================>] 5.12kB/5.12kB

bc3a46414f17: Loading layer [==================================================>] 1.536kB/1.536kB

8fc7185920f1: Loading layer [==================================================>] 3.584kB/3.584kB

bac2a7f893df: Loading layer [==================================================>] 847MB/847MB

092bab498a3d: Loading layer [==================================================>] 13.31MB/13.31MB

19db93cce63e: Loading layer [==================================================>] 2.56kB/2.56kB

2fc7499dded4: Loading layer [==================================================>] 3.042GB/3.042GB

7da6b60d7eb7: Loading layer [==================================================>] 2.53MB/2.53MB

057242764992: Loading layer [==================================================>] 8.704kB/8.704kB

9a996f3d539d: Loading layer [==================================================>] 3.072kB/3.072kB

4cdf0570391d: Loading layer [==================================================>] 104.2MB/104.2MB

Loaded image: dt_iot/paddlespeech:latest

[SUCCESS] 镜像加载成功

[INFO] 生成docker-compose.yaml...

[SUCCESS] docker-compose.yaml创建完成

[INFO] 启动容器...

[+] Running 2/2

✔ Network dtnet Created 0.5s

✔ Container paddlespeech Started 4.6s

[SUCCESS] 容器启动成功

[SUCCESS] PaddleSpeech 离线安装完成!

docker ps

docker logs -f paddlespeech

Change to the deployment directory, where docker-compose.yaml is located:

/dt_opt/paddlespeech

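docker-compose up -d # start the container

docker-compose down # stop and remove the container

docker-compose restart # restart the container

docker logs -f paddlespeech # follow the logs in real time

docker exec -it paddlespeech /bin/bash # open a shell in the container

docker rm -f paddlespeech # remove the container

docker rmi dt_iot/paddlespeech:latest # remove the image

docker volume rm paddlespeech_data # remove the data volume

docker network rm dtnet # remove the network

docker system prune -a # remove stopped containers, unused networks, and unused images (add --volumes to also remove unused volumes)

These are the commonly used commands; use them as needed.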

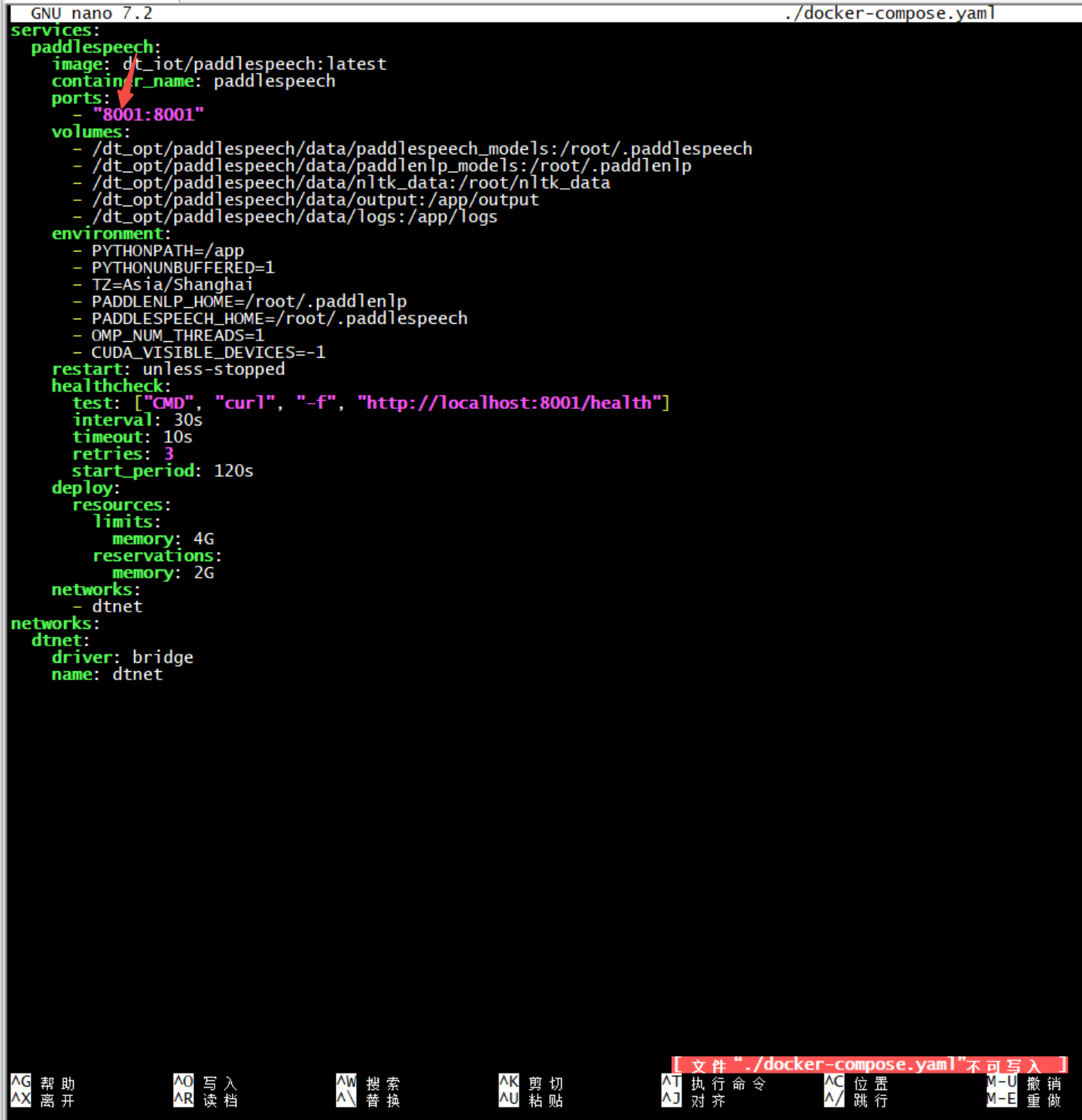

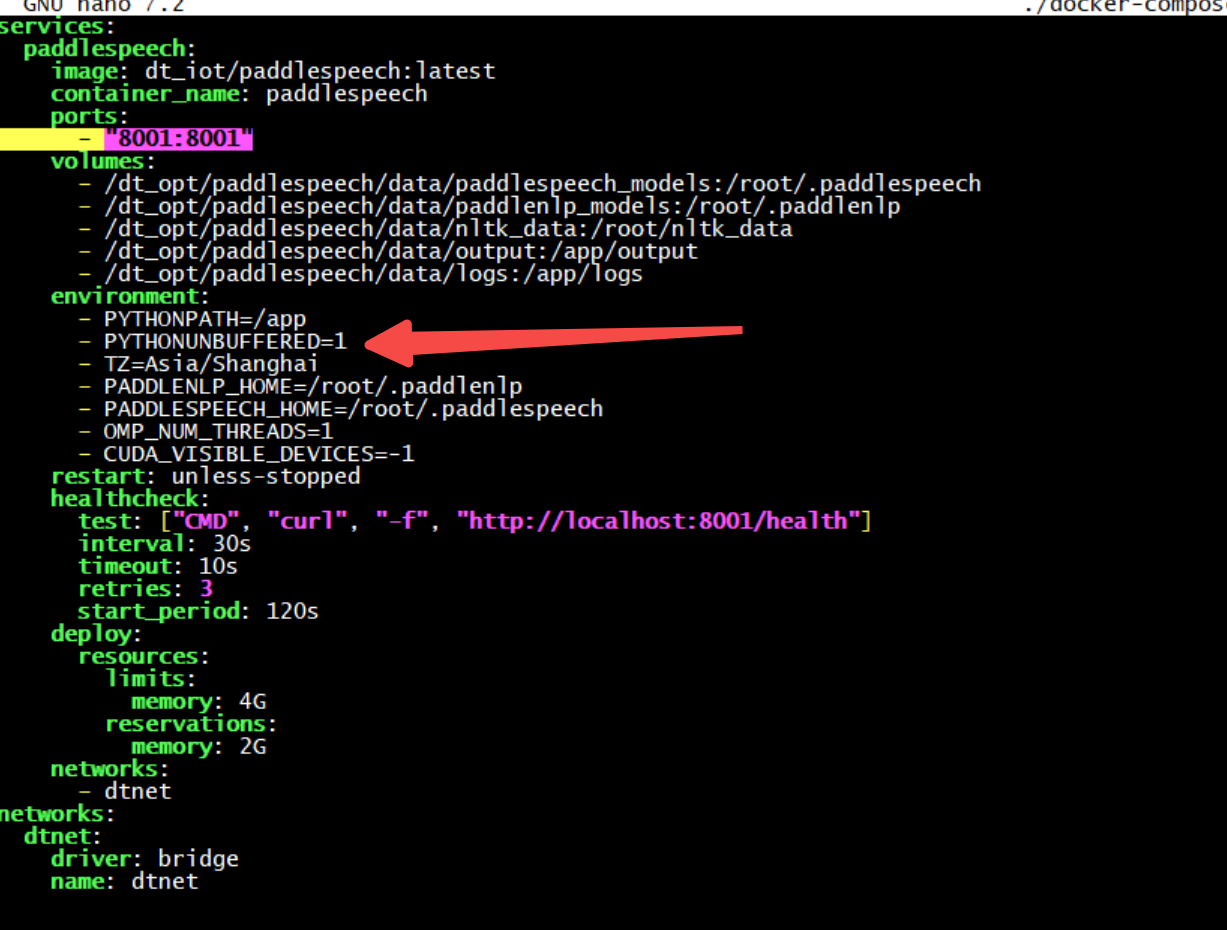

Locate the file

/dt_opt/paddlespeech/docker-compose.yaml

and change the port mapping. Only the first port (the host port) needs to be changed to the port you want, as shown below:

docker-compose down

sudo nano ./docker-compose.yaml

# Change the port mapping on line 6

# - "8001:8001"

# Save and exit, then restart the container for the change to take effect

docker-compose up -d
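The same edit can be scripted instead of made in an editor. The sketch below is an illustration only: it assumes the mapping is written as a quoted list item in the form "8001:8001", as in the comment above, and it rewrites only the first such mapping in the text. The function name set_host_port is our own.

```python
import re

# Matches a list item such as:   - "8001:8001"
PORT_MAPPING = re.compile(r'^([ \t]*-[ \t]*")\d+(:\d+")', re.MULTILINE)


def set_host_port(compose_text, new_port):
    """Return compose_text with the host side of the first port mapping replaced."""
    if not 1 <= new_port <= 65535:
        raise ValueError(f"port out of range: {new_port}")
    updated, count = PORT_MAPPING.subn(rf"\g<1>{new_port}\g<2>", compose_text, count=1)
    if count == 0:
        raise ValueError("no quoted host:container port mapping found")
    return updated
```

The container port on the right-hand side stays unchanged, so the service inside the container keeps listening on the same port.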

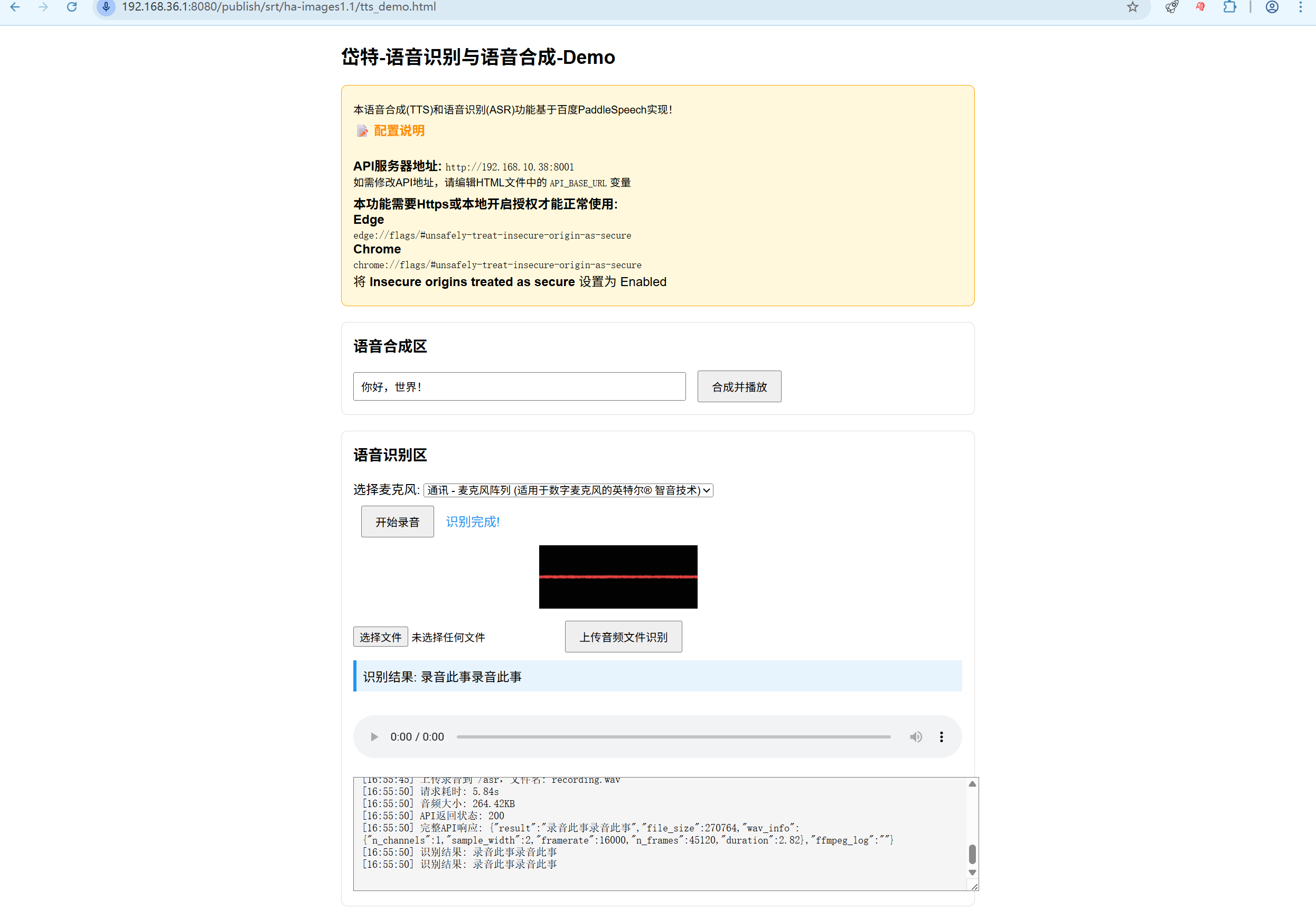

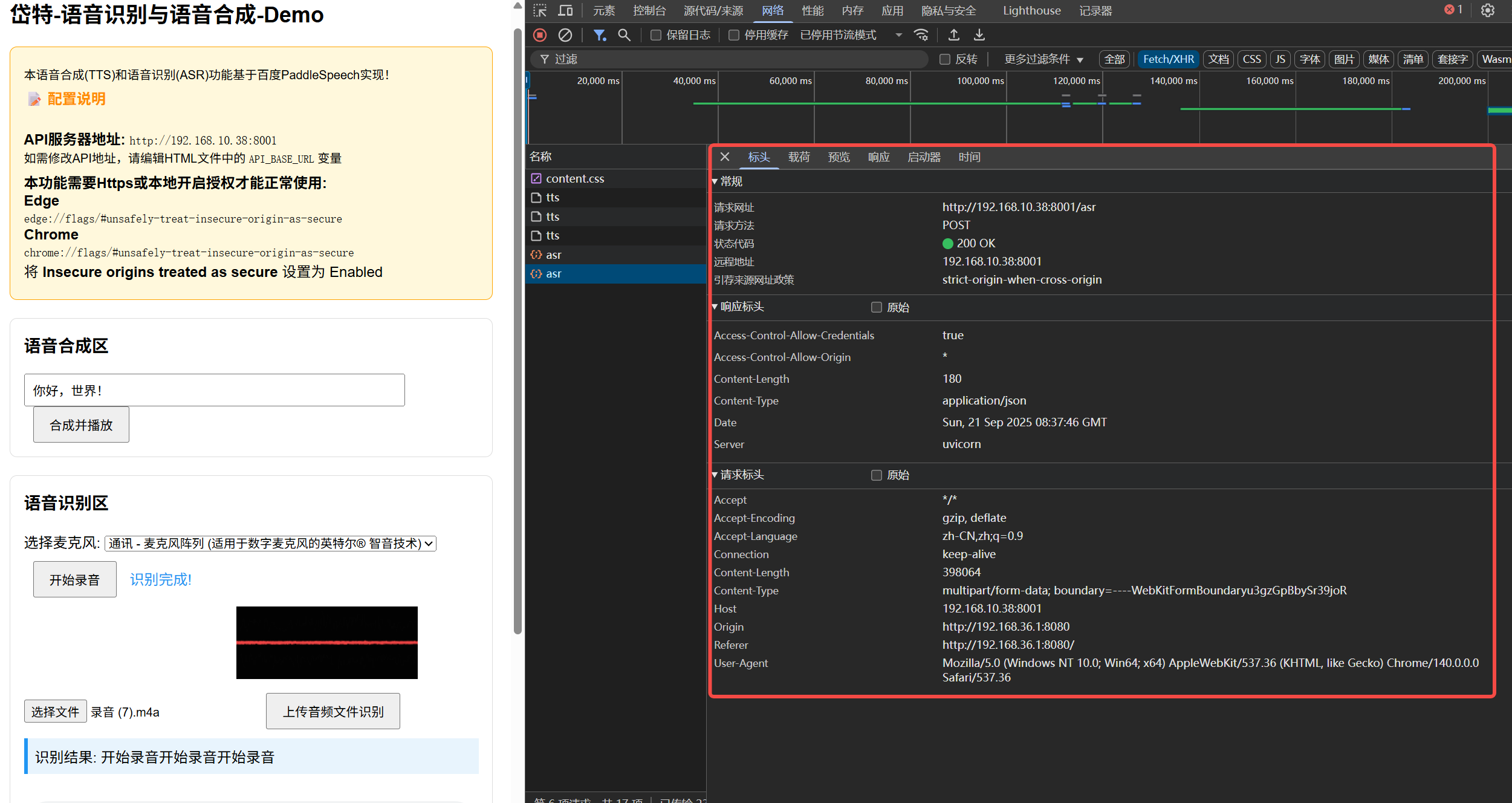

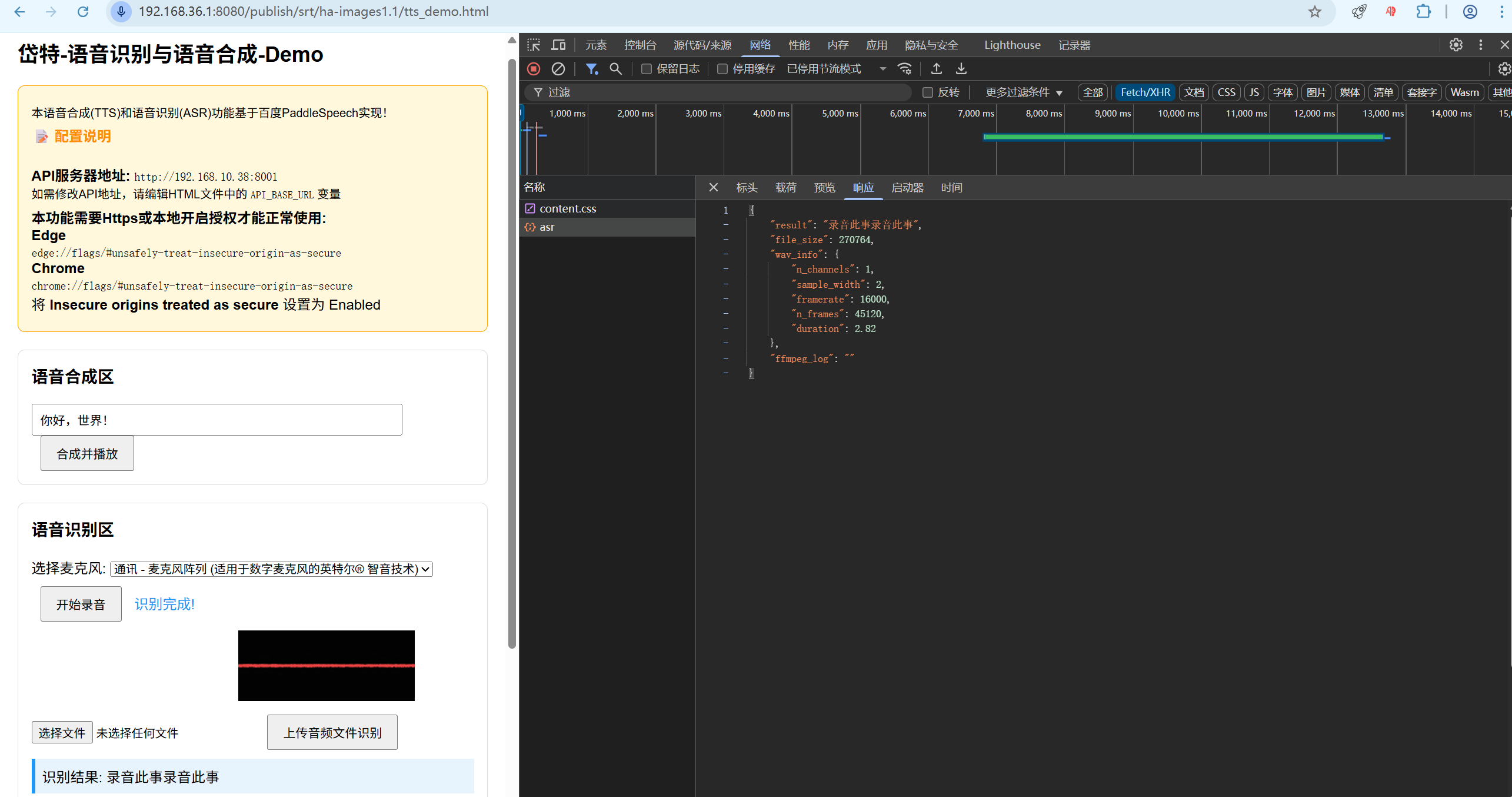

To demonstrate how to call this API, we provide a test web page. Download it from:

https://datacdn.data-it.tech/HomeAssistant/dokerimages/paddlespeech1.1/tts_demo.zip

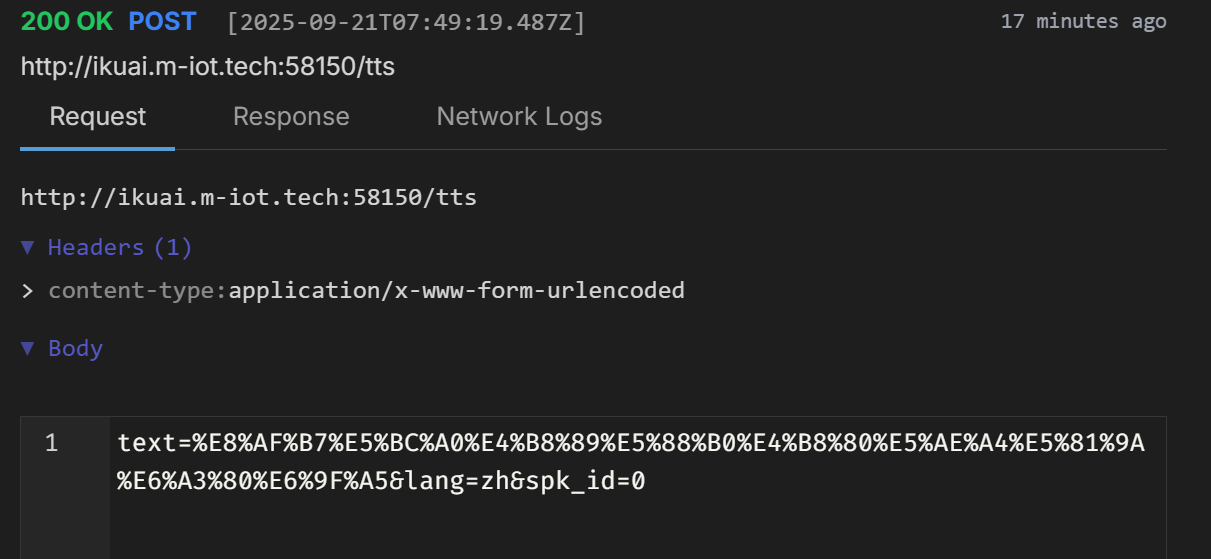

curl --request POST \

--url http://ikuai.m-iot.tech:58150/tts \

--header 'content-type: application/x-www-form-urlencoded' \

--data 'text=请张三到一室做检查' \

--data lang=zh \

--data spk_id=0

POST /tts HTTP/1.1

Content-Type: application/x-www-form-urlencoded

Host: ikuai.m-iot.tech:58150

Content-Length: 103

text=%E8%AF%B7%E5%BC%A0%E4%B8%89%E5%88%B0%E4%B8%80%E5%AE%A4%E5%81%9A%E6%A3%80%E6%9F%A5&lang=zh&spk_id=0
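The request above can also be sent from Python using only the standard library. This is a minimal sketch: the function names are our own, the base URL is whatever host and port you deployed to, and the response is written to disk as returned by the server.

```python
import urllib.parse
import urllib.request


def build_tts_body(text, lang="zh", spk_id=0):
    """URL-encode the three form fields used by the /tts endpoint."""
    fields = {"text": text, "lang": lang, "spk_id": spk_id}
    return urllib.parse.urlencode(fields).encode("ascii")


def synthesize(base_url, text, out_path, timeout=60):
    """POST text to <base_url>/tts and save the returned audio; returns bytes written."""
    request = urllib.request.Request(
        base_url.rstrip("/") + "/tts",
        data=build_tts_body(text),
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        audio = response.read()
    with open(out_path, "wb") as handle:
        handle.write(audio)
    return len(audio)
```

For the sample sentence, build_tts_body produces the same 103-byte body as the raw request shown above. A call such as synthesize("http://localhost:8001", "请张三到一室做检查", "out.wav") assumes the default port mapping.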

//asynchttp

AsyncHttpClient client = new DefaultAsyncHttpClient();

client.prepare("POST", "http://ikuai.m-iot.tech:58150/tts")

.setHeader("content-type", "application/x-www-form-urlencoded")

.setBody("text=%E8%AF%B7%E5%BC%A0%E4%B8%89%E5%88%B0%E4%B8%80%E5%AE%A4%E5%81%9A%E6%A3%80%E6%9F%A5&lang=zh&spk_id=0")

.execute()

.toCompletableFuture()

.thenAccept(System.out::println)

.join();

client.close();

//nethttp

HttpRequest request = HttpRequest.newBuilder()

.uri(URI.create("http://ikuai.m-iot.tech:58150/tts"))

.header("content-type", "application/x-www-form-urlencoded")

.method("POST", HttpRequest.BodyPublishers.ofString("text=%E8%AF%B7%E5%BC%A0%E4%B8%89%E5%88%B0%E4%B8%80%E5%AE%A4%E5%81%9A%E6%A3%80%E6%9F%A5&lang=zh&spk_id=0"))

.build();

HttpResponse<String> response = HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());

System.out.println(response.body());

//okhttp

OkHttpClient client = new OkHttpClient();

MediaType mediaType = MediaType.parse("application/x-www-form-urlencoded");

RequestBody body = RequestBody.create(mediaType, "text=%E8%AF%B7%E5%BC%A0%E4%B8%89%E5%88%B0%E4%B8%80%E5%AE%A4%E5%81%9A%E6%A3%80%E6%9F%A5&lang=zh&spk_id=0");

Request request = new Request.Builder()

.url("http://ikuai.m-iot.tech:58150/tts")

.post(body)

.addHeader("content-type", "application/x-www-form-urlencoded")

.build();

Response response = client.newCall(request).execute();

//unirest

HttpResponse<String> response = Unirest.post("http://ikuai.m-iot.tech:58150/tts")

.header("content-type", "application/x-www-form-urlencoded")

.body("text=%E8%AF%B7%E5%BC%A0%E4%B8%89%E5%88%B0%E4%B8%80%E5%AE%A4%E5%81%9A%E6%A3%80%E6%9F%A5&lang=zh&spk_id=0")

.asString();

//Xhr

const data = 'text=%E8%AF%B7%E5%BC%A0%E4%B8%89%E5%88%B0%E4%B8%80%E5%AE%A4%E5%81%9A%E6%A3%80%E6%9F%A5&lang=zh&spk_id=0';

const xhr = new XMLHttpRequest();

xhr.withCredentials = true;

xhr.addEventListener('readystatechange', function () {

if (this.readyState === this.DONE) {

console.log(this.responseText);

}

});

xhr.open('POST', 'http://ikuai.m-iot.tech:58150/tts');

xhr.setRequestHeader('content-type', 'application/x-www-form-urlencoded');

xhr.send(data);

//Axios

import axios from 'axios';

const encodedParams = new URLSearchParams();

encodedParams.set('text', '请张三到一室做检查');

encodedParams.set('lang', 'zh');

encodedParams.set('spk_id', '0');

const options = {

method: 'POST',

url: 'http://ikuai.m-iot.tech:58150/tts',

headers: {'content-type': 'application/x-www-form-urlencoded'},

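data: encodedParams,

responseType: 'arraybuffer', // /tts returns binary audio/wav, so do not let axios decode it as text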

};

try {

const { data } = await axios.request(options);

console.log(data);

} catch (error) {

console.error(error);

}

//Fetch

const url = 'http://ikuai.m-iot.tech:58150/tts';

const options = {

method: 'POST',

headers: {'content-type': 'application/x-www-form-urlencoded'},

body: new URLSearchParams({text: '请张三到一室做检查', lang: 'zh', spk_id: '0'})

};

try {

const response = await fetch(url, options);

const data = await response.blob(); // /tts responds with audio/wav, so read it as a Blob rather than JSON

console.log(data);

} catch (error) {

console.error(error);

}

//JQuery

const settings = {

async: true,

crossDomain: true,

url: 'http://ikuai.m-iot.tech:58150/tts',

method: 'POST',

headers: {

'content-type': 'application/x-www-form-urlencoded'

},

data: {

text: '请张三到一室做检查',

lang: 'zh',

spk_id: '0'

}

};

$.ajax(settings).done(function (response) {

console.log(response);

});

//httpclient

using System.Net.Http.Headers;

var client = new HttpClient();

var request = new HttpRequestMessage

{

Method = HttpMethod.Post,

RequestUri = new Uri("http://ikuai.m-iot.tech:58150/tts"),

Content = new FormUrlEncodedContent(new Dictionary<string, string>

{

{ "text", "请张三到一室做检查" },

{ "lang", "zh" },

{ "spk_id", "0" },

}),

};

using (var response = await client.SendAsync(request))

{

response.EnsureSuccessStatusCode();

var body = await response.Content.ReadAsStringAsync();

Console.WriteLine(body);

}

//restsharp

var client = new RestClient("http://ikuai.m-iot.tech:58150/tts");

var request = new RestRequest("", Method.Post);

request.AddHeader("content-type", "application/x-www-form-urlencoded");

request.AddParameter("application/x-www-form-urlencoded", "text=%E8%AF%B7%E5%BC%A0%E4%B8%89%E5%88%B0%E4%B8%80%E5%AE%A4%E5%81%9A%E6%A3%80%E6%9F%A5&lang=zh&spk_id=0", ParameterType.RequestBody);

var response = client.Execute(request);

package main

import (

"fmt"

"strings"

"net/http"

"io"

)

func main() {

url := "http://ikuai.m-iot.tech:58150/tts"

payload := strings.NewReader("text=%E8%AF%B7%E5%BC%A0%E4%B8%89%E5%88%B0%E4%B8%80%E5%AE%A4%E5%81%9A%E6%A3%80%E6%9F%A5&lang=zh&spk_id=0")

req, _ := http.NewRequest("POST", url, payload)

req.Header.Add("content-type", "application/x-www-form-urlencoded")

res, _ := http.DefaultClient.Do(req)

defer res.Body.Close()

body, _ := io.ReadAll(res.Body)

fmt.Println(res)

fmt.Println(string(body))

}

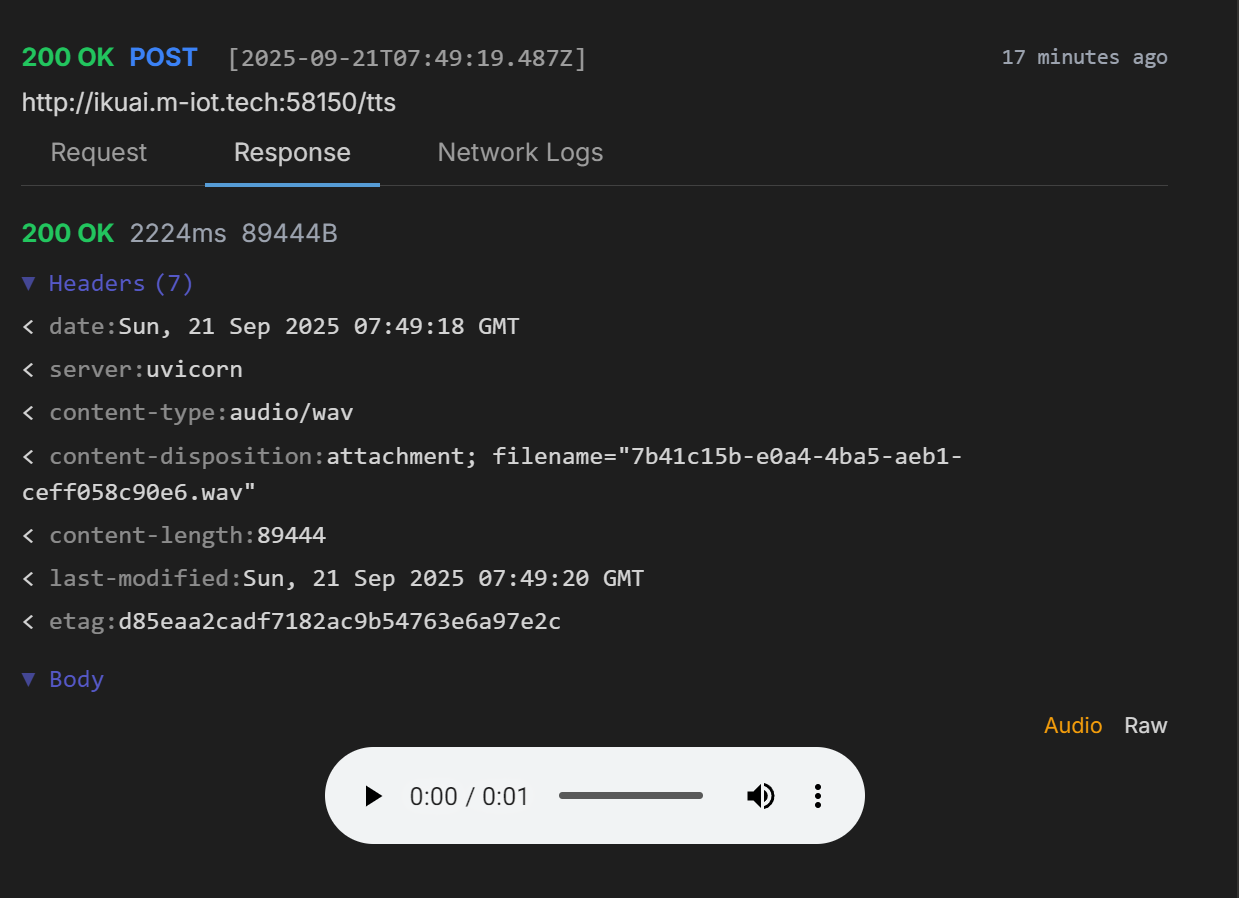

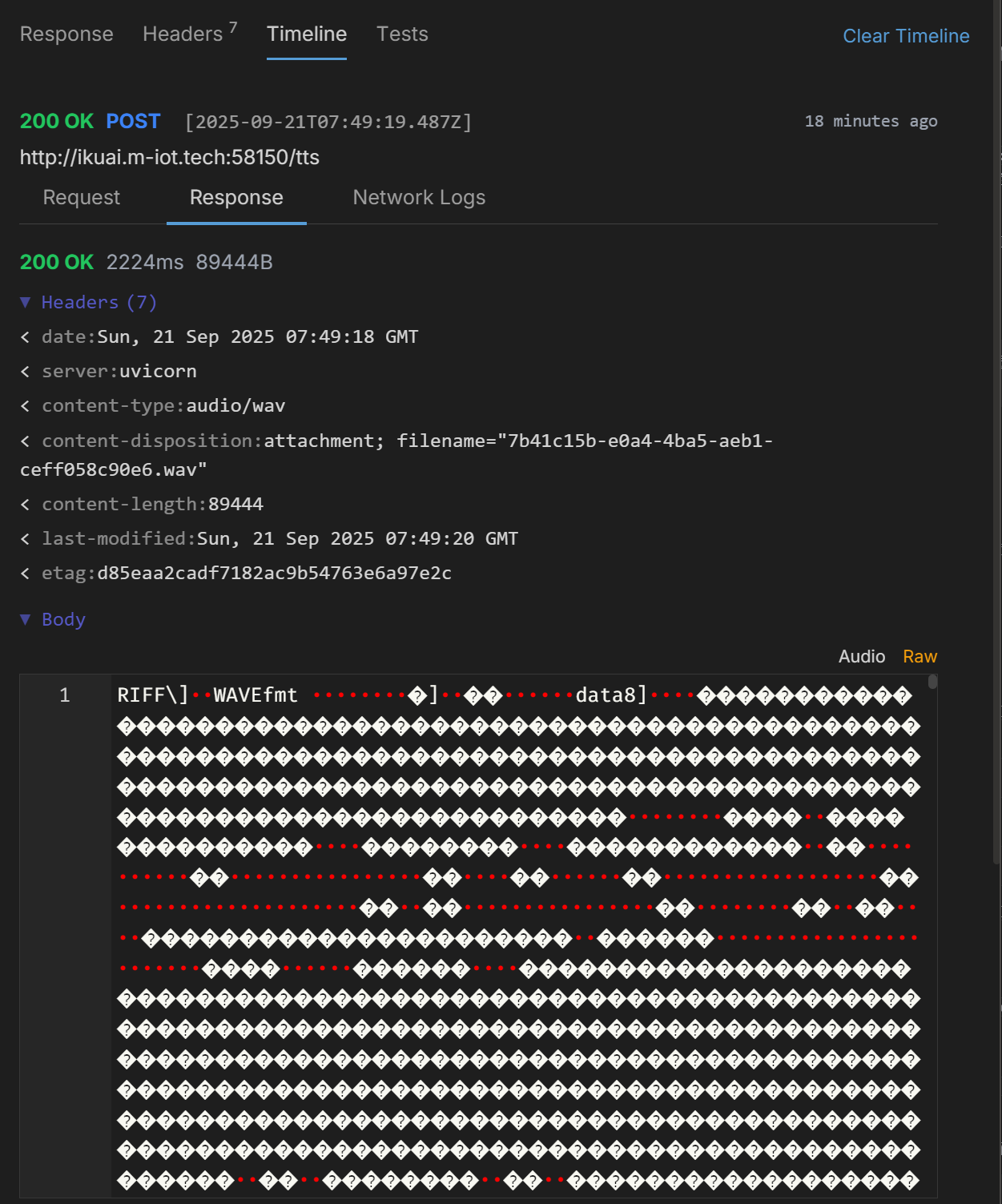

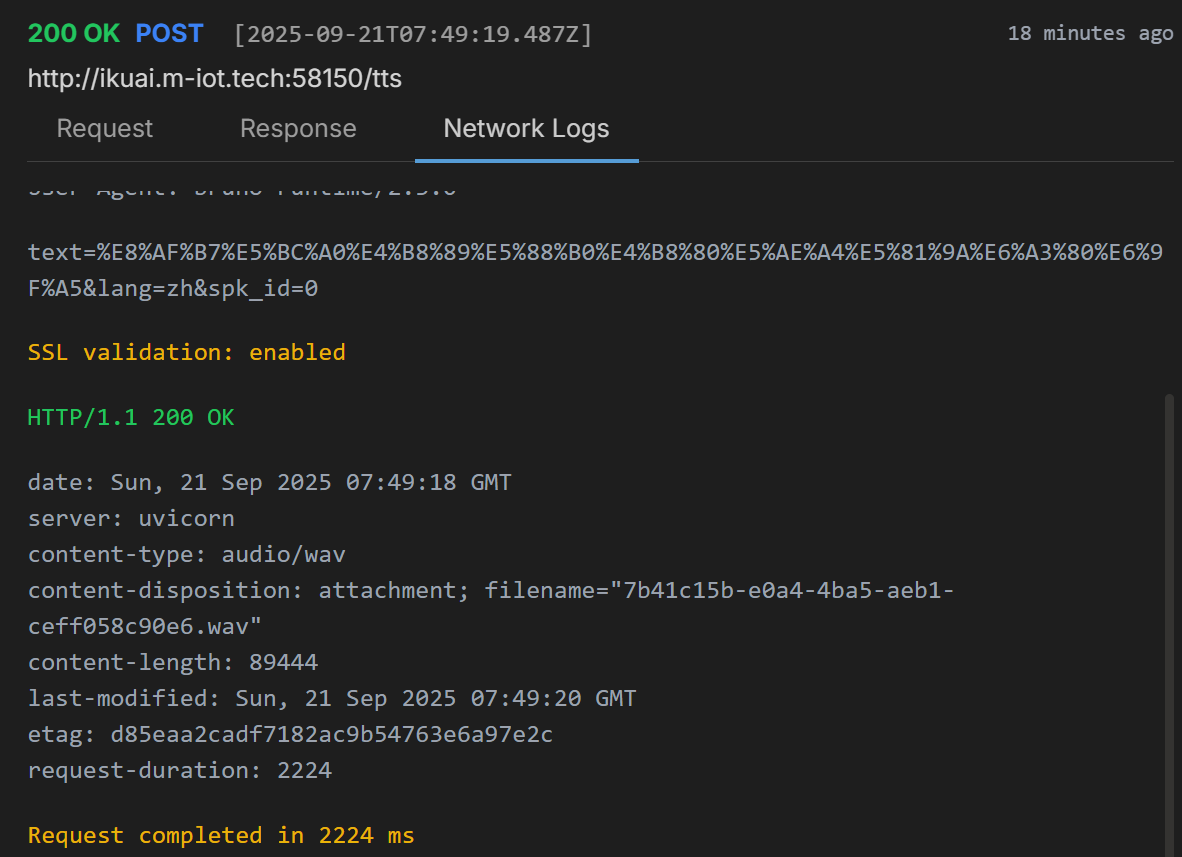

Preparing request to http://ikuai.m-iot.tech:58150/tts

Current time is 2025-09-21T07:49:17.236Z

POST http://ikuai.m-iot.tech:58150/tts

Accept: application/json, text/plain, */*

Content-Type: application/x-www-form-urlencoded

User-Agent: bruno-runtime/2.3.0

text=%E8%AF%B7%E5%BC%A0%E4%B8%89%E5%88%B0%E4%B8%80%E5%AE%A4%E5%81%9A%E6%A3%80%E6%9F%A5&lang=zh&spk_id=0

SSL validation: enabled

HTTP/1.1 200 OK

date: Sun, 21 Sep 2025 07:49:18 GMT

server: uvicorn

content-type: audio/wav

content-disposition: attachment; filename="7b41c15b-e0a4-4ba5-aeb1-ceff058c90e6.wav"

content-length: 89444

last-modified: Sun, 21 Sep 2025 07:49:20 GMT

etag: d85eaa2cadf7182ac9b54763e6a97e2c

request-duration: 2224

Request completed in 2224 ms
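The response is a WAV file whose name is carried in the content-disposition header. The sketch below shows one way to read that name and to sanity-check the audio bytes with the standard library; both helper names are our own, and the check only confirms that the payload parses as WAV.

```python
import io
import wave
from email.message import Message


def filename_from_disposition(header_value):
    """Extract the filename parameter from a Content-Disposition header value."""
    message = Message()
    message["Content-Disposition"] = header_value
    return message.get_filename()


def wav_summary(audio_bytes):
    """Parse WAV bytes and report channel count, sample width, rate and duration."""
    with wave.open(io.BytesIO(audio_bytes), "rb") as wav:
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_width": wav.getsampwidth(),
            "framerate": rate,
            "duration": wav.getnframes() / rate,
        }
```

wave.open raises wave.Error if the bytes are not a valid WAV file, which makes it a cheap guard against saving an error page as audio.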

curl --request POST \

--url http://ikuai.m-iot.tech:58150/asr \

--header 'content-type: multipart/form-data' \

--form 'file=@C:\Users\trphoenix\Documents\录音\录音 (6).m4a' \

--form lang=zh \

--form format=wav \

--form sample_rate=16000

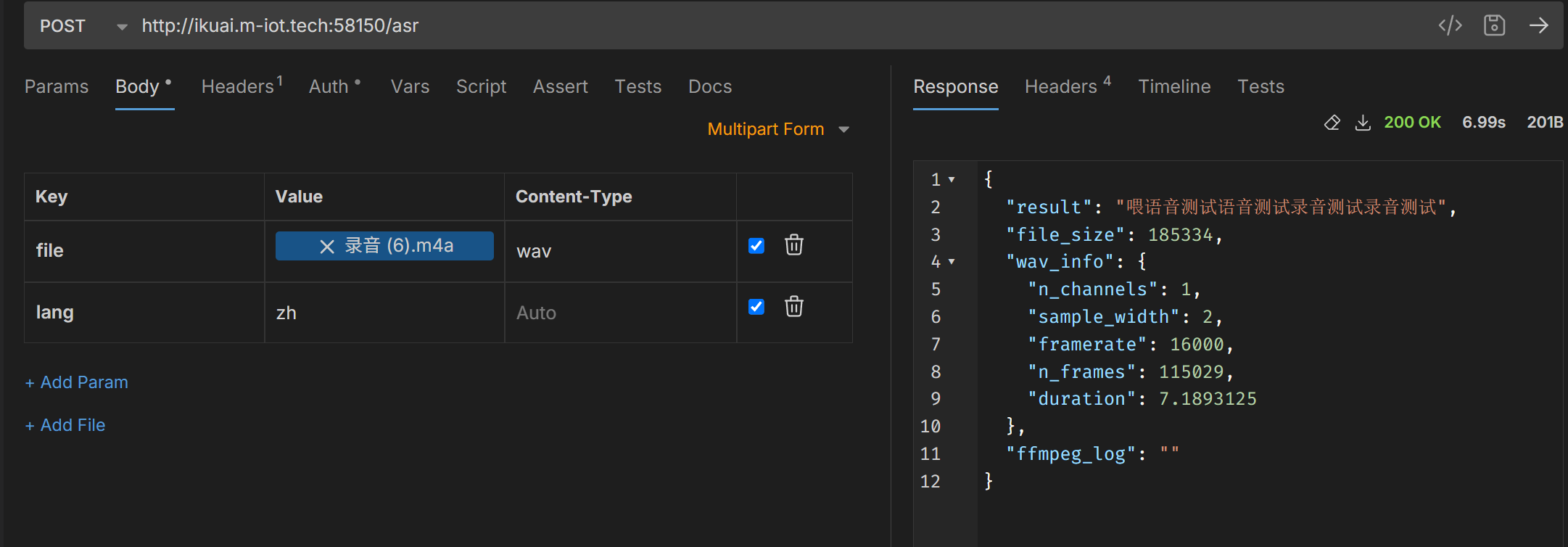

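Upload the file directly. Common audio formats such as wav, m4a, and mp3 are supported; the server converts the file to WAV automatically before recognition.

curl --request POST \

--url http://ikuai.m-iot.tech:58150/asr \

--header 'content-type: multipart/form-data' \

--form 'file=@C:\Users\trphoenix\Documents\录音\录音 (6).m4a' \

--form lang=zh

Only the file and lang parameters are required; the other parameters can be omitted, as shown below: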

POST /asr HTTP/1.1

Content-Type: multipart/form-data; boundary=---011000010111000001101001

Host: ikuai.m-iot.tech:58150

Content-Length: 239

-----011000010111000001101001

Content-Disposition: form-data; name="file"

C:\Users\trphoenix\Documents\录音\录音 (6).m4a

-----011000010111000001101001

Content-Disposition: form-data; name="lang"

zh

-----011000010111000001101001--
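Note that the file part must carry the bytes of the audio file, not its path. The following Python sketch builds such a multipart body with the standard library and posts it to /asr. The helper names and the application/octet-stream part type are our own choices; only the file and lang field names come from the request above.

```python
import json
import urllib.request
import uuid


def build_multipart(fields, file_field, filename, file_bytes, boundary=None):
    """Return (body, content_type) for a multipart/form-data request."""
    boundary = boundary or uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        text_part = (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
            f"{value}\r\n"
        )
        parts.append(text_part.encode("utf-8"))
    file_header = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{file_field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    )
    # The raw file bytes follow the part header, then CRLF and the closing boundary.
    parts.append(file_header.encode("utf-8") + file_bytes + b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


def recognize(base_url, audio_path, lang="zh", timeout=120):
    """Upload an audio file to <base_url>/asr and return the decoded JSON reply."""
    with open(audio_path, "rb") as handle:
        audio = handle.read()
    body, content_type = build_multipart({"lang": lang}, "file", "audio.m4a", audio)
    request = urllib.request.Request(
        base_url.rstrip("/") + "/asr",
        data=body,
        headers={"Content-Type": content_type},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

The upload name audio.m4a is a placeholder; pass the real file name if the server uses the extension to pick a decoder.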

// asynchttp

AsyncHttpClient client = new DefaultAsyncHttpClient();

client.prepare("POST", "http://ikuai.m-iot.tech:58150/asr")

.setHeader("content-type", "multipart/form-data; boundary=---011000010111000001101001")

.setBody("-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"file\"\r\n\r\nC:\\Users\\trphoenix\\Documents\\录音\\录音 (6).m4a\r\n-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"lang\"\r\n\r\nzh\r\n-----011000010111000001101001--\r\n\r\n")

.execute()

.toCompletableFuture()

.thenAccept(System.out::println)

.join();

client.close();

// nethttp

HttpRequest request = HttpRequest.newBuilder()

.uri(URI.create("http://ikuai.m-iot.tech:58150/asr"))

.header("content-type", "multipart/form-data; boundary=---011000010111000001101001")

.method("POST", HttpRequest.BodyPublishers.ofString("-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"file\"\r\n\r\nC:\\Users\\trphoenix\\Documents\\录音\\录音 (6).m4a\r\n-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"lang\"\r\n\r\nzh\r\n-----011000010111000001101001--\r\n\r\n"))

.build();

HttpResponse<String> response = HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());

System.out.println(response.body());

// okhttp

OkHttpClient client = new OkHttpClient();

MediaType mediaType = MediaType.parse("multipart/form-data; boundary=---011000010111000001101001");

RequestBody body = RequestBody.create(mediaType, "-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"file\"\r\n\r\nC:\\Users\\trphoenix\\Documents\\录音\\录音 (6).m4a\r\n-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"lang\"\r\n\r\nzh\r\n-----011000010111000001101001--\r\n\r\n");

Request request = new Request.Builder()

.url("http://ikuai.m-iot.tech:58150/asr")

.post(body)

.addHeader("content-type", "multipart/form-data; boundary=---011000010111000001101001")

.build();

Response response = client.newCall(request).execute();

// unirest

HttpResponse<String> response = Unirest.post("http://ikuai.m-iot.tech:58150/asr")

.header("content-type", "multipart/form-data; boundary=---011000010111000001101001")

.body("-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"file\"\r\n\r\nC:\\Users\\trphoenix\\Documents\\录音\\录音 (6).m4a\r\n-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"lang\"\r\n\r\nzh\r\n-----011000010111000001101001--\r\n\r\n")

.asString();

//xhr

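const data = new FormData();

// Assumption: fileInput is an <input type="file"> element on the page; append the File object itself, because a path string would be sent as plain text

data.append('file', fileInput.files[0]);

data.append('lang', 'zh');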

const xhr = new XMLHttpRequest();

xhr.withCredentials = true;

xhr.addEventListener('readystatechange', function () {

if (this.readyState === this.DONE) {

console.log(this.responseText);

}

});

xhr.open('POST', 'http://ikuai.m-iot.tech:58150/asr');

xhr.send(data);

// Axios

import axios from 'axios';

const form = new FormData();

// Assumption: fileInput is an <input type="file"> element on the page; append the File object, not a path string

form.append('file', fileInput.files[0]);

form.append('lang', 'zh');

const options = {

method: 'POST',

url: 'http://ikuai.m-iot.tech:58150/asr',

// Do not set content-type by hand: the multipart boundary is derived from the FormData object

data: form

};

try {

const { data } = await axios.request(options);

console.log(data);

} catch (error) {

console.error(error);

}

// fetch

const url = 'http://ikuai.m-iot.tech:58150/asr';

const form = new FormData();

// Assumption: fileInput is an <input type="file"> element on the page; append the File object, not a path string

form.append('file', fileInput.files[0]);

form.append('lang', 'zh');

const options = {method: 'POST'};

options.body = form;

try {

const response = await fetch(url, options);

const data = await response.json();

console.log(data);

} catch (error) {

console.error(error);

}

//jquery

const form = new FormData();

// Assumption: fileInput is an <input type="file"> element on the page; append the File object, not a path string

form.append('file', fileInput.files[0]);

form.append('lang', 'zh');

const settings = {

async: true,

crossDomain: true,

url: 'http://ikuai.m-iot.tech:58150/asr',

method: 'POST',

headers: {},

processData: false,

contentType: false,

mimeType: 'multipart/form-data',

data: form

};

$.ajax(settings).done(function (response) {

console.log(response);

});

//httpclient

using System.Net.Http.Headers;

var client = new HttpClient();

var request = new HttpRequestMessage

{

Method = HttpMethod.Post,

RequestUri = new Uri("http://ikuai.m-iot.tech:58150/asr"),

Content = new MultipartFormDataContent

{

// Send the file's bytes (not its path) as the "file" part; File comes from System.IO

{ new ByteArrayContent(File.ReadAllBytes(@"C:\Users\trphoenix\Documents\录音\录音 (6).m4a")), "file", "录音 (6).m4a" },

{ new StringContent("zh"), "lang" },

},

};

using (var response = await client.SendAsync(request))

{

response.EnsureSuccessStatusCode();

var body = await response.Content.ReadAsStringAsync();

Console.WriteLine(body);

}

//Restsharp

var client = new RestClient("http://ikuai.m-iot.tech:58150/asr");

var request = new RestRequest("", Method.Post);

request.AddHeader("content-type", "multipart/form-data; boundary=---011000010111000001101001");

request.AddParameter("multipart/form-data; boundary=---011000010111000001101001", "-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"file\"\r\n\r\nC:\\Users\\trphoenix\\Documents\\录音\\录音 (6).m4a\r\n-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"lang\"\r\n\r\nzh\r\n-----011000010111000001101001--\r\n\r\n", ParameterType.RequestBody);

var response = client.Execute(request);

package main

import (

"fmt"

"strings"

"net/http"

"io"

)

func main() {

url := "http://ikuai.m-iot.tech:58150/asr"

payload := strings.NewReader("-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"file\"\r\n\r\nC:\\Users\\trphoenix\\Documents\\录音\\录音 (6).m4a\r\n-----011000010111000001101001\r\nContent-Disposition: form-data; name=\"lang\"\r\n\r\nzh\r\n-----011000010111000001101001--\r\n\r\n")

req, _ := http.NewRequest("POST", url, payload)

req.Header.Add("content-type", "multipart/form-data; boundary=---011000010111000001101001")

res, _ := http.DefaultClient.Do(req)

defer res.Body.Close()

body, _ := io.ReadAll(res.Body)

fmt.Println(res)

fmt.Println(string(body))

}

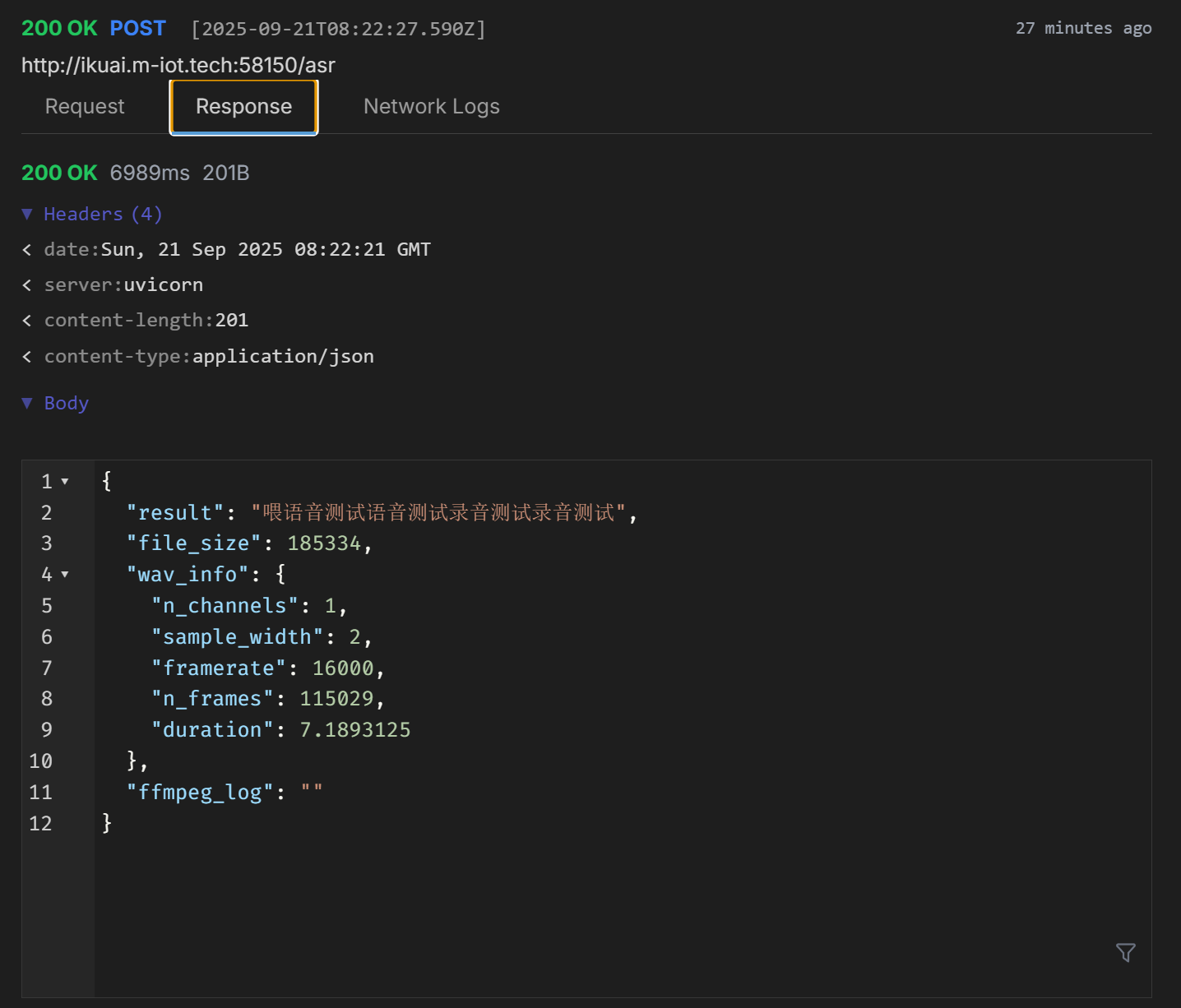

{

"result": "喂语音测试语音测试录音测试录音测试",

"file_size": 185334,

"wav_info": {

"n_channels": 1,

"sample_width": 2,

"framerate": 16000,

"n_frames": 115029,

"duration": 7.1893125

},

"ffmpeg_log": ""

}
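A client usually needs only a few fields from this reply. The sketch below parses the JSON and cross-checks the reported duration against n_frames divided by framerate (115029 / 16000 = 7.1893125 for the sample above). The class and function names are our own; the field names are those shown in the response.

```python
import json
from dataclasses import dataclass


@dataclass
class AsrResult:
    text: str
    duration: float
    framerate: int


def parse_asr_response(payload):
    """Pick the recognized text and basic audio info out of an /asr JSON reply."""
    data = json.loads(payload)
    info = data["wav_info"]
    return AsrResult(text=data["result"], duration=info["duration"], framerate=info["framerate"])


def duration_is_consistent(payload, tolerance=1e-6):
    """Check that duration equals n_frames / framerate within a small tolerance."""
    info = json.loads(payload)["wav_info"]
    return abs(info["n_frames"] / info["framerate"] - info["duration"]) <= tolerance
```

The framerate of 16000 in the reply reflects the WAV file produced by the server-side conversion, not necessarily the sample rate of the uploaded recording.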

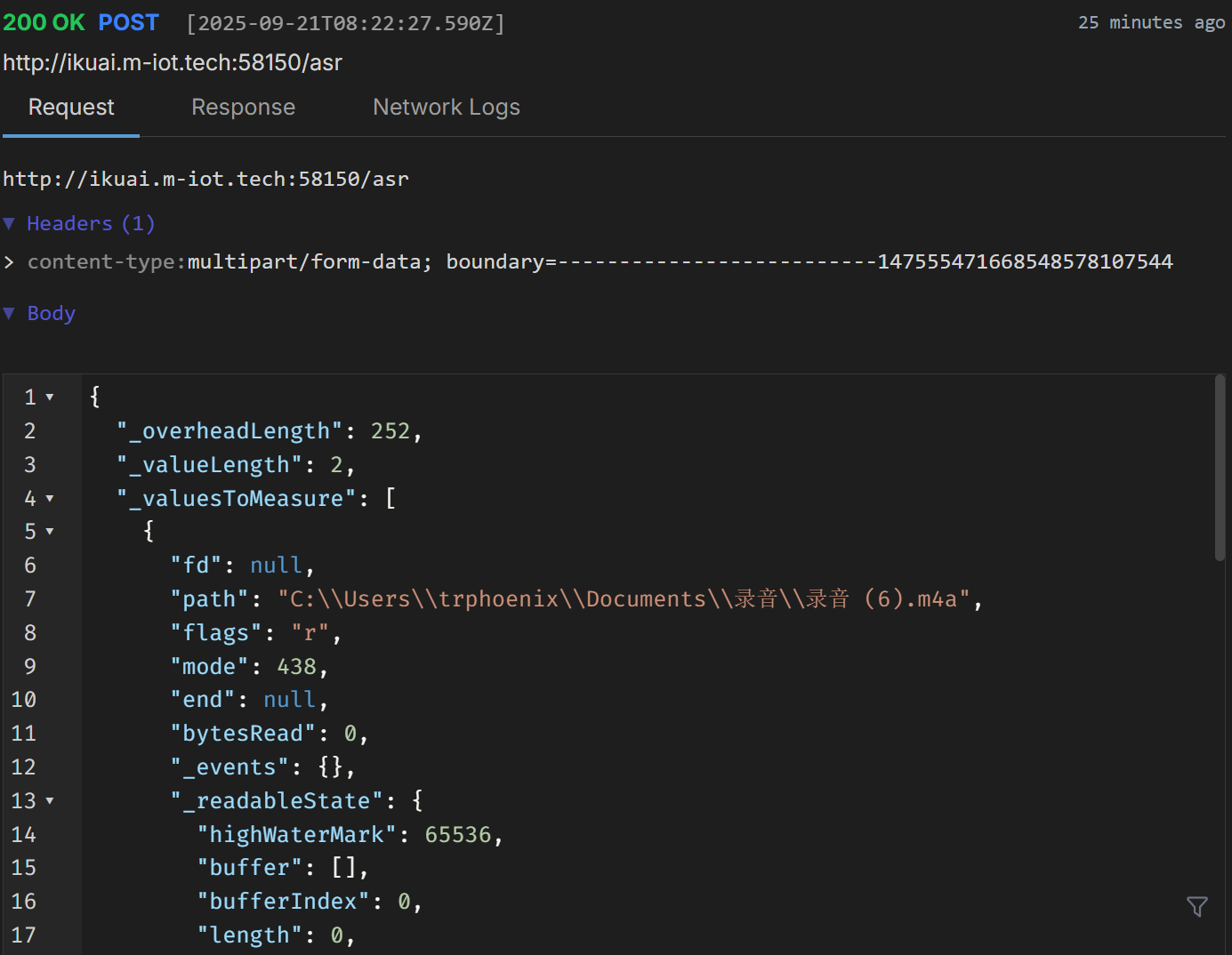

body

{

"_overheadLength": 252,

"_valueLength": 2,

"_valuesToMeasure": [

{

"fd": null,

"path": "C:\\Users\\trphoenix\\Documents\\录音\\录音 (6).m4a",

"flags": "r",

"mode": 438,

"end": null,

"bytesRead": 0,

"_events": {},

"_readableState": {

"highWaterMark": 65536,

"buffer": [],

"bufferIndex": 0,

"length": 0,

"pipes": [],

"awaitDrainWriters": null

},

"_eventsCount": 3

}

],

"writable": false,

"readable": true,

"dataSize": 0,

"maxDataSize": 2097152,

"pauseStreams": true,

"_released": false,

"_streams": [

"----------------------------147555471668548578107544\r\nContent-Disposition: form-data; name=\"file\"; filename=\"录音 (6).m4a\"\r\nContent-Type: wav\r\n\r\n",

{

"source": "[Circular]",

"dataSize": 0,

"maxDataSize": null,

"pauseStream": true,

"_maxDataSizeExceeded": false,

"_released": false,

"_bufferedEvents": [

{

"0": "pause"

}

],

"_events": {},

"_eventsCount": 1

},

null,

"----------------------------147555471668548578107544\r\nContent-Disposition: form-data; name=\"lang\"\r\n\r\n",

"zh",

null

],

"_currentStream": null,

"_insideLoop": false,

"_pendingNext": false,

"_boundary": "--------------------------147555471668548578107544"

}

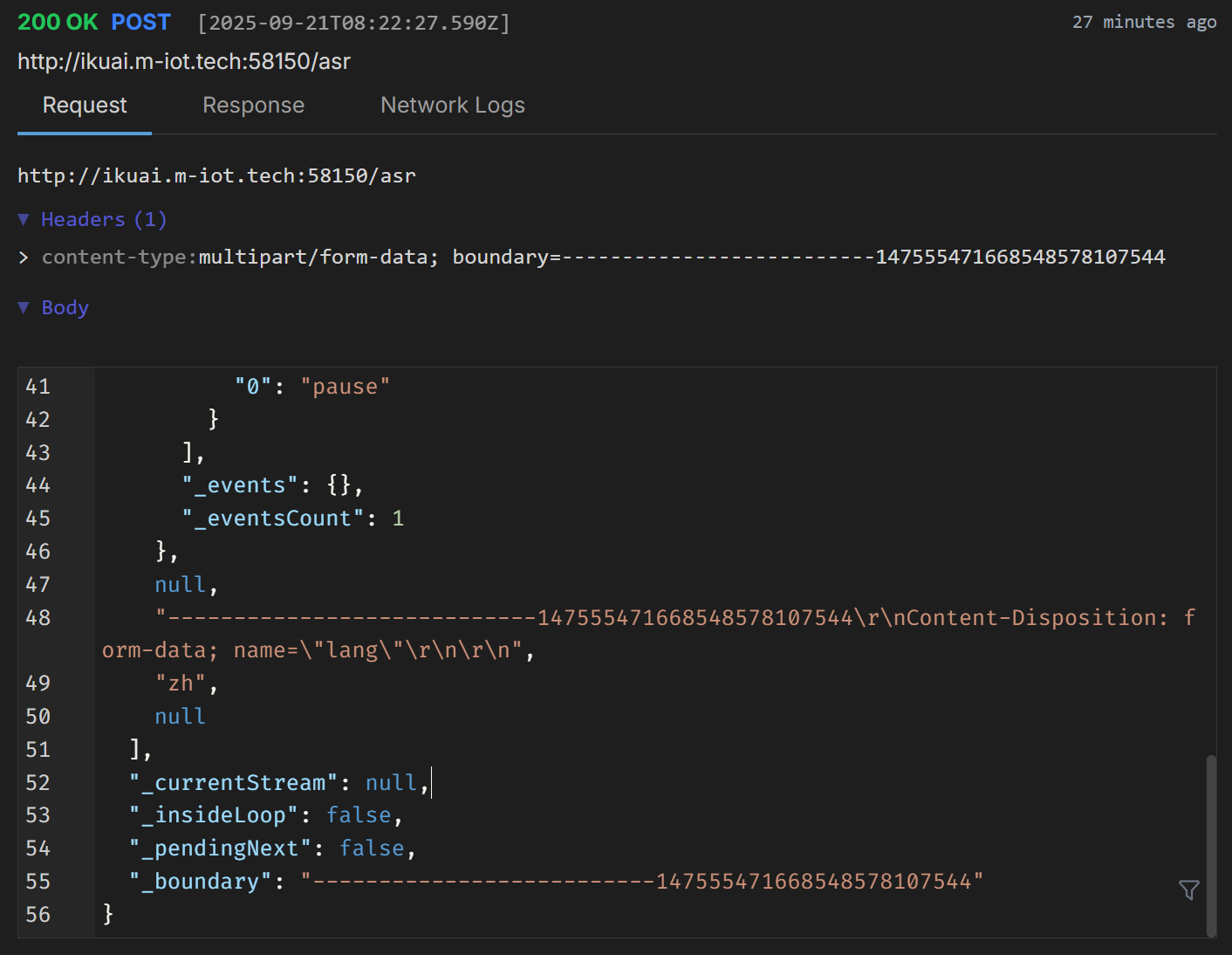

***Network Logs***

Preparing request to http://ikuai.m-iot.tech:58150/asr

Current time is 2025-09-21T08:22:20.590Z

POST http://ikuai.m-iot.tech:58150/asr

Accept: application/json, text/plain, */*

Content-Type: multipart/form-data; boundary=--------------------------147555471668548578107544

User-Agent: bruno-runtime/2.3.0

{ "_overheadLength": 252, "_valueLength": 2, "_valuesToMeasure": [ { "fd": null, "path": "C:\\Users\\trphoenix\\Documents\\录音\\录音 (6).m4a", "flags": "r", "mode": 438, "end": null, "bytesRead": 0, "_events": {}, "_readableState": { "highWaterMark": 65536, "buffer": [], "bufferIndex": 0, "length": 0, "pipes": [], "awaitDrainWriters": null }, "_eventsCount": 3 } ], "writable": false, "readable": true, "dataSize": 0, "maxDataSize": 2097152, "pauseStreams": true, "_released": false, "_streams": [ "----------------------------147555471668548578107544\r\nContent-Disposition: form-data; name=\"file\"; filename=\"录音 (6).m4a\"\r\nContent-Type: wav\r\n\r\n", { "source": { "fd": null, "path": "C:\\Users\\trphoenix\\Documents\\录音\\录音 (6).m4a", "flags": "r", "mode": 438, "end": null, "bytesRead": 0, "_events": {}, "_readableState": { "highWaterMark": 65536, "buffer": [], "bufferIndex": 0, "length": 0, "pipes": [], "awaitDrainWriters": null }, "_eventsCount": 3 }, "dataSize": 0, "maxDataSize": null, "pauseStream": true, "_maxDataSizeExceeded": false, "_released": false, "_bufferedEvents": [ { "0": "pause" } ], "_events": {}, "_eventsCount": 1 }, null, "----------------------------147555471668548578107544\r\nContent-Disposition: form-data; name=\"lang\"\r\n\r\n", "zh", null ], "_currentStream": null, "_insideLoop": false, "_pendingNext": false, "_boundary": "--------------------------147555471668548578107544" }

SSL validation: enabled

HTTP/1.1 200 OK

date: Sun, 21 Sep 2025 08:22:21 GMT

server: uvicorn

content-length: 201

content-type: application/json

request-duration: 6989

Request completed in 6989 ms

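Notes

One of the libraries used by PaddleSpeech may be single-threaded, so responses can slow down under high concurrency. In that case, consider adding more instances.

In practice, to improve concurrency you can also try setting the environment variable PYTHONUNBUFFFERED=4 (spelled as in the original configuration), or a higher suitable number. Note that Python's standard PYTHONUNBUFFERED variable only controls output buffering, so confirm in docker-compose.yaml which variable the image actually reads before relying on this setting.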
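If you run several instances, the client has to spread requests across them. The following is a minimal round-robin sketch under the assumption that each instance is reachable at its own base URL; the class name and the example ports are hypothetical, and a reverse proxy in front of the containers would serve the same purpose.

```python
import itertools
import threading


class RoundRobin:
    """Hand out base URLs in rotation; safe to share between threads."""

    def __init__(self, base_urls):
        urls = list(base_urls)
        if not urls:
            raise ValueError("at least one base URL is required")
        self._cycle = itertools.cycle(urls)
        self._lock = threading.Lock()

    def next_url(self):
        """Return the next base URL in the rotation."""
        with self._lock:
            return next(self._cycle)
```

For example, RoundRobin(["http://localhost:8001", "http://localhost:8002"]) alternates between two instances mapped to different host ports.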

(End)